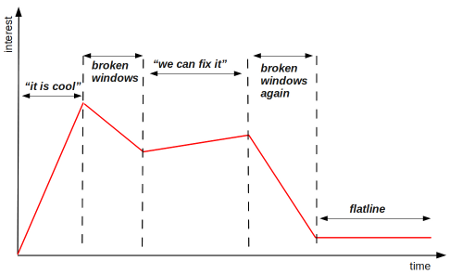

I really like continuous integration, which is why I’m always sad when I see one dying. Unfortunately, I’ve seen it happen a lot, and in every case its lifeline looked like this:

The introduction of continuous integration is usually started by an enthusiastic team member who read about it and decided that it was cool and just what the team needed. Her enthusiasm quickly infects the whole team; they set up the build servers and are really happy with it.

The first fracture happens when the first unexpected failed build appears on account of a test case failure. Nobody knows why the test fails, because it works locally, also works on the server, but mysteriously sometimes it just doesn’t work in either of these places. The team is aware of the phenomenon, closes the case saying “don’t worry, we know that sometimes it fails” and moves forward. As time goes on, the team adds more test cases, and another failing test case appears on the horizon. Mysteriously, just as before. The same decision is made, because a team can live with two known failing test cases, can’t it? Unfortunately, this question signs the death sentence of the continuous integration build, because failing test cases decrease the faith in the system, and team members will start ignoring the results, because they are not trustworthy. Criminologists call this phenomenon broken windows syndrome:

> Consider a building with a few broken windows. If the windows are not repaired, the tendency is for vandals to break a few more windows. Eventually, they may even break into the building, and if it’s unoccupied, perhaps become squatters or light fires inside. (source: Wikipedia)

Occasionally, some of the team members pull themselves together and decide to “fix the build”. They might even get management support. Unfortunately, the broken windows are more powerful than them: while they are desperately trying to fix the test cases, other team members are going forward and building software based on an insecure base and creating more failing test cases: the broken windows are working behind the curtains. At the end, only the initiator checks on the build, because everybody else has already given up the fight with the windmills. This is the end of the continuous integration for the team: “flatline”.

For me, sporadically failing test cases are like diseases and should be handled accordingly: until the cause of the problem is known, they should be separated from the reliable (healthy) test cases. If a team relies only on reliable test cases and keeps the unreliable ones in a test quarantine, the team won’t lose its faith in continuous integration, and the build can live on and support the team. Let’s see how to set up a test quarantine.

A test case fails sporadically because of

- a timing issue (either the test is too fast or too slow)
- an external dependency problem (e.g. a database bug, an undocumented API, etc.)

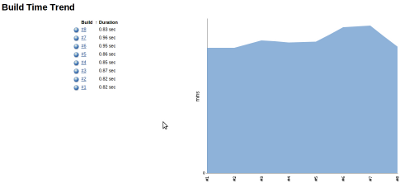

Let’s take a plain Ruby project with RSpec and Cucumber under Jenkins, based on source code from the brilliant RSpec Book. Before I applied any changes, its build trend looked like this:

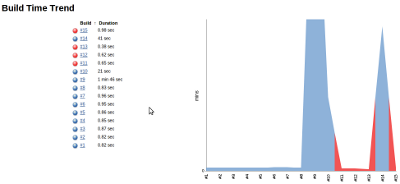

I made some changes right after build #8, and the trend changed like this:

The output does not require further explanation; I think the result is familiar to everybody: increased execution times and sporadically failing builds, even though there was only one change between builds #8 and #9.

The first thing to do is to find the culprits who made the build red and increased the execution time. Finding the failing test cases is trivial, because their names are provided in the console output of the build:

    Failures:

      1) Codebreaker::Game#guess sends the mark to output
         Failure/Error: output.should_receive(:puts).with('++++')
           Double "output" received :puts with unexpected arguments
             expected: ("++++")
                  got: ("+++")
         # ./spec/codebreaker/game_spec.rb:31

    Finished in 0.12779 seconds
    12 examples, 1 failure

On the other hand, finding the slow test cases might be tricky, but not impossible. RSpec has a nice functionality called profile. It only prints out the top 10 slowest test cases, but usually that’s more than enough:

    ~/temp/rspec-book/code/cb/47 $ rspec --profile
    ............

    Top 10 slowest examples:
      Codebreaker::Game#start sends a welcome message
        0.00065 seconds ./spec/codebreaker/game_spec.rb:17
      ...
      Codebreaker::Marker#exact_match_count with no matches returns 0
        0.00022 seconds ./spec/codebreaker/marker_spec.rb:15

    Finished in 0.11365 seconds
    12 examples, 0 failures
    ~/temp/rspec-book/code/cb/47 $

The usage format of Cucumber prints out the step definitions with their execution times. The slowest ones come first:

    ~/temp/rspec-book/code/cb/47 $ cucumber --format usage
    ....---...............................................................

    0.0007265 /^I should see "([^"]*)"$/ # features/step_definitions/codebreaker_steps.rb:41
      0.0007590 Then I should see "Welcome to Codebreaker!" # features/codebreaker_starts_game.feature:10
      0.0006940 And I should see "Enter guess:" # features/codebreaker_starts_game.feature:11
    0.0003550 /^I am not yet playing$/ # features/step_definitions/codebreaker_steps.rb:23
      0.0003550 Given I am not yet playing # features/codebreaker_starts_game.feature:8
    0.0002970 /^I start a new game$/ # features/step_definitions/codebreaker_steps.rb:31
      0.0002970 When I start a new game # features/codebreaker_starts_game.feature:9

    22 scenarios (22 passed)
    67 steps (67 passed)
    0m15.076s
    ~/temp/rspec-book/code/cb/47 $

There is nothing interesting in the RSpec report, but the step definition at codebreaker_steps.rb:41 needed twice as much time to execute as the other step definitions used by the first scenario of codebreaker_starts_game.feature: 0.0007265s vs. 0.0003550s. Now I know which test cases need to be separated from the others:

- ./spec/codebreaker/game_spec.rb:31 (it fails sometimes)
- features/codebreaker_starts_game.feature, first scenario (it is slow)
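The timing comparison above can also be done by a script once the usage report grows beyond a handful of lines. This is a minimal sketch, assuming each step definition line has the shape `<seconds> /<regexp>/ # <file>:<line>` as in the output quoted above (other Cucumber versions may print it differently). The `StepTiming` struct, the helper names and the "twice the median" threshold are made up for illustration:

```ruby
# Sketch: rank step definitions from `cucumber --format usage` output.
# Assumption: a step definition line looks like
#   <seconds> /<regexp>/ # <file>:<line>
StepTiming = Struct.new(:seconds, :pattern, :location)

STEP_DEFINITION_LINE = %r{\A\s*(\d+\.\d+)\s+(/.*/)\s+#\s+(\S+)\s*\z}

# Collects one StepTiming per step definition line and ignores everything
# else (individual steps, progress dots, summary lines).
def parse_step_definitions(usage_output)
  usage_output.each_line.map { |line|
    match = STEP_DEFINITION_LINE.match(line)
    StepTiming.new(match[1].to_f, match[2], match[3]) if match
  }.compact
end

# Returns the step definitions that took at least `factor` times the
# (upper) median of all step definition timings.
def slow_step_definitions(timings, factor = 2.0)
  return [] if timings.empty?
  median = timings.map(&:seconds).sort[timings.size / 2]
  timings.select { |timing| timing.seconds >= median * factor }
end

sample = <<~'USAGE'
  0.0007265 /^I should see "([^"]*)"$/ # features/step_definitions/codebreaker_steps.rb:41
  0.0003550 /^I am not yet playing$/ # features/step_definitions/codebreaker_steps.rb:23
  0.0002970 /^I start a new game$/ # features/step_definitions/codebreaker_steps.rb:31
USAGE

slow_step_definitions(parse_step_definitions(sample)).each do |timing|
  puts "#{timing.location} (#{timing.seconds}s)"
end
```

With the three step definitions from the report, only the one at codebreaker_steps.rb:41 crosses the threshold. The factor is a judgment call and would need tuning for a real suite.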

First, I have to find a way to mark them, and second, I need a way to run the test suites without them. I’m going to use the exclusion filter functionality of rspec…

    describe "#guess" do
      it "sends the mark to output", :sporadic => true do
        game.start('1234')
        output.should_receive(:puts).with('++++')
        game.guess('1234')
      end
    end

…and the tagging functionality of Cucumber for marking:

    @slow
    Scenario: start game
      Given I am not yet playing
      When I start a new game
      Then I should see "Welcome to Codebreaker!"
      And I should see "Enter guess:"

Finally, I’m going to write rake tasks for the separation:

    require 'rubygems'
    require 'rspec/core/rake_task'
    require 'cucumber/rake/task'

    # Commit build: reliable specs only
    RSpec::Core::RakeTask.new(:spec) do |t|
      t.rspec_opts = ['--format progress']
      t.rspec_opts << "--tag ~sporadic --tag ~slow"
    end

    # Quarantine build: every spec
    RSpec::Core::RakeTask.new(:spec_all) do |t|
      t.rspec_opts = ['--format progress']
    end

    # Commit build: reliable scenarios only
    Cucumber::Rake::Task.new(:cucumber) do |t|
      t.cucumber_opts = ["--format progress"]
      t.cucumber_opts << "--tag ~sporadic --tag ~slow"
    end

    # Quarantine build: every scenario
    Cucumber::Rake::Task.new(:cucumber_all) do |t|
      t.cucumber_opts = ["--format progress"]
    end

As you can see, when someone executes the regular spec and cucumber tasks, she will only see the results of the reliable test cases (~ means excluding a tag). It is still wise to keep the possibility of executing the whole test suite as well, because it is important to monitor the separated test cases together with the existing ones: there might be other problematic test cases that have not surfaced yet. Set up a completely separate *Jenkins project* for the whole test suite (`spec_all`, `cucumber_all`), which builds several times a day. With this approach, we’ve found several sporadically failing test cases, so it really paid off.

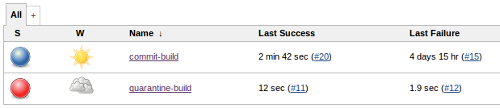

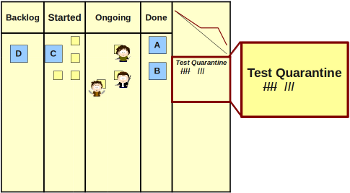

As you can see, the commit build is perfect (the sun means a continuous green build), and on the other hand the quarantine build is still sporadic (the clouds mean green and red builds).
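The history of the quarantine build can also be mined for further candidates. This is a small sketch, assuming the per-build results have already been collected into hashes of test name to `:passed` or `:failed` (a hypothetical format, not something Jenkins emits as-is). The sample data is made up for illustration:

```ruby
# Sketch: classify test cases from the outcomes of several full-suite builds.
# Assumption: `builds` is an array with one hash per build, mapping a test
# name to :passed or :failed.

# A test that always passed is reliable, one that always failed is simply
# broken, and anything in between is a quarantine candidate.
def classification(results)
  return :reliable if results.all? { |result| result == :passed }
  return :broken if results.all? { |result| result == :failed }
  :sporadic
end

def classify_tests(builds)
  outcomes = Hash.new { |hash, name| hash[name] = [] }
  builds.each do |build|
    build.each { |name, result| outcomes[name] << result }
  end
  outcomes.each_with_object({}) do |(name, results), report|
    report[name] = classification(results)
  end
end

# Made-up history of three quarantine builds.
builds = [
  { "game_spec.rb:31" => :passed, "marker_spec.rb:15" => :passed },
  { "game_spec.rb:31" => :failed, "marker_spec.rb:15" => :passed },
  { "game_spec.rb:31" => :passed, "marker_spec.rb:15" => :passed }
]

classify_tests(builds).each { |name, verdict| puts "#{name}: #{verdict}" }
```

A test that fails in every build is reported as `:broken` rather than `:sporadic`, because that is an ordinary regression and belongs in the commit build, not in the quarantine.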

One more thing. If your test cases use a database, as in Ruby on Rails, use a separate database for each Jenkins project. I forgot to do this, and when the two Jenkins projects were running against the same database, they corrupted each other’s data and both failed.
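One way to keep the two projects apart is to derive the database name from the job that is running. This is a minimal sketch, assuming the build runs under Jenkins, which exposes the job name in the `JOB_NAME` environment variable. The `cb_test` prefix, the helper name and the naming scheme are hypothetical:

```ruby
# Sketch: derive a per-job test database name so that two Jenkins projects
# never share one. JOB_NAME is set by Jenkins for each build; the prefix
# and the naming scheme are assumptions made for this example.
def test_database_name(env = ENV, prefix = "cb_test")
  job = env["JOB_NAME"].to_s
  return prefix if job.empty? # local run outside Jenkins

  # Keep only characters that are safe in a database name.
  suffix = job.downcase.gsub(/[^a-z0-9]+/, "_").gsub(/\A_+|_+\z/, "")
  suffix.empty? ? prefix : "#{prefix}_#{suffix}"
end

puts test_database_name({ "JOB_NAME" => "codebreaker-commit" })
puts test_database_name({ "JOB_NAME" => "Codebreaker Quarantine" })
puts test_database_name({})
```

In a Rails project the result could be fed into `config/database.yml` through ERB, so that the commit build and the quarantine build each get their own database without any manual setup.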

Putting test cases into a quarantine won’t make your *continuous integration* better, and it won’t stop the spread, but it is a start. Remember when I wrote about the moment when my example team got divided into two parties? Well, the first party wanted a reliable test suite - check. The second party wanted to work uninterrupted on test cases and make them better - check. For me it is a win-win situation.

I recommend having a corner on your whiteboard - if you have one - where you count the number of test cases kept in the quarantine. **The team should commit to decreasing the number of test cases in the quarantine week by week.** Another recommendation: if a test case is considered fixed, do not remove the tag immediately. Leave it in the quarantine for a couple of days or a week, and if it stays green the whole time, then remove the tag.

The board above shows that the team has already fixed five test cases and they still have three, but the result is promising.
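The number for the whiteboard does not have to be counted by hand. This is a rough sketch, assuming the quarantine marks are the `:sporadic` / `:slow` metadata and the `@sporadic` / `@slow` tags used above, and that specs live under `spec/` and features under `features/` (an assumed layout). It is a plain text scan, so a mark inside a comment or a string is counted too:

```ruby
# Sketch: count quarantined test cases by scanning the source files.
# Assumptions: the tag names below, and the spec/ and features/ layout.
QUARANTINE_TAGS = %w[sporadic slow].freeze
FEATURE_TAGS = QUARANTINE_TAGS.map { |tag| "@#{tag}" }.freeze

tag_names = QUARANTINE_TAGS.join("|")
# Matches RSpec metadata such as `:sporadic => true` or `slow: true`.
SPEC_MARK = /:(?:#{tag_names})\s*=>\s*true|\b(?:#{tag_names}):\s*true/

# Counts the lines of a spec file that carry a quarantine mark.
def count_spec_marks(source)
  source.each_line.count { |line| line.match?(SPEC_MARK) }
end

# Counts the tag lines of a feature file that contain a quarantine tag.
def count_feature_marks(source)
  source.each_line.count do |line|
    line.strip.start_with?("@") && line.split.any? { |tag| FEATURE_TAGS.include?(tag) }
  end
end

# Sums the marks over all spec and feature files below `root`.
def count_quarantined(root = ".")
  specs = Dir.glob(File.join(root, "spec", "**", "*_spec.rb"))
  features = Dir.glob(File.join(root, "features", "**", "*.feature"))
  specs.sum { |path| count_spec_marks(File.read(path)) } +
    features.sum { |path| count_feature_marks(File.read(path)) }
end

puts "Test cases in quarantine: #{count_quarantined}"
```

Run from the project root, this prints a single number that can go straight onto the board. A tag placed on a whole `describe` block or `Feature` counts as one, so the figure is a lower bound.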

As a bonus, when a developer starts working on a feature and checks the code together with its test cases, she will notice the sporadic or the slow mark. Then she will know that she is going to work with a code base which does not have a reliable test suite, so she will be extremely careful when changing the production code. She might even fix a couple of quarantined test cases while she’s at it.
